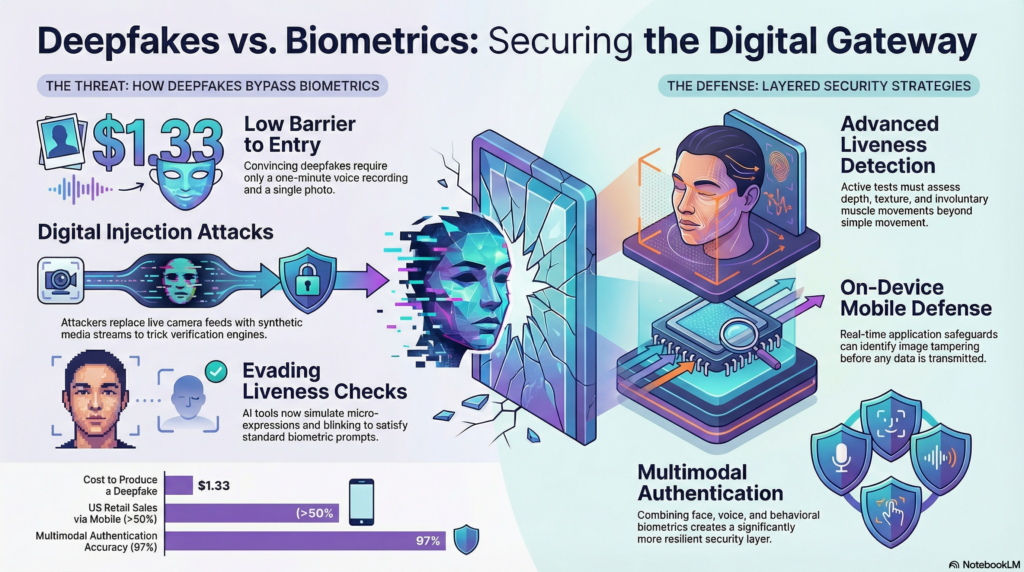

Deepfake attacks have moved from online spectacle to real operational threat. Facial recognition now sits at the center of this shift. With fraud cases related to AI-generated videos on the rise, security teams can no longer assume that Face ID or facial logins always prove someone’s identity. Here is how threat actors are systematically bypassing what was once treated as a reliable biometric control.

Why Facial Recognition Is Under Pressure

Pressure on facial recognition is rising because it has become the default security gatekeeper on mobile devices, where a massive volume of high-value activity now occurs. As consumer life shifts to mobile, the opportunities for fraud grow with it: projections show that by 2027, over 50% of retail ecommerce sales in the United States will come from mobile devices. Much of this mobile commerce depends on the convenience of built-in facial authentication, which is now critical for banking, payments and identity verification.

Attackers are well aware of this reliance and deliberately target biometric entry points, which serve as critical gateways for account access. The barrier to entry remains low: a deepfake costs an average of just $1.33 to produce, while global losses from AI-assisted fraud put trillions of dollars at stake.

The escalating scale of content generation further compounds the threat. With the tools available today, anyone can create a convincing video from just a one-minute voice recording and a photo. Attackers can use these deepfakes to fast-track fraudulent approvals for loans or high-value transactions. This accessibility transforms the threat from a niche problem into a scalable challenge that can overwhelm authentication systems at speeds and volumes never seen before.

How Deepfakes Defeat Facial Recognition

To carry out a deepfake attack, threat actors bypass facial recognition controls at several stages of the verification process.

Reconstructing Identities Through Stolen Biometric Data

Attackers begin by collecting images and voice samples from social media, breached identity documents and dark web datasets. These inputs feed generative models that rebuild facial geometry and speech patterns. The output includes realistic expressions and lip-synced movements that mirror natural behavior.

Injecting Synthetic Media Into Verification Sessions

Attackers then introduce synthetic media into live verification flows. Some use virtual camera injection, where the live camera feed is replaced with a pre-generated or real-time deepfake stream. Others target image buffers during processing. The facial recognition engine receives altered frames while the user interface appears unchanged.

Manipulating Data Streams With Digital Injection Attacks

Digital injection attacks target the communication layer between the device and the server. Synthetic biometric data is injected into the session before liveness checks analyze it. Some tools simulate subtle head movement, blinking and facial micro-expressions to satisfy basic liveness prompts and evade standard controls. Once the manipulated media is accepted as legitimate, the system grants access.

Detection and Mitigation Strategies

Defense teams must deploy layered countermeasures that address generation speed and delivery methods.

1. Advanced Liveness Detection

Active challenge-response tests that assess depth, texture and involuntary muscle movements significantly improve security. Passive methods, such as analyzing reflection patterns and camera noise artifacts, provide additional safeguards. When face, voice and behavioral biometrics are combined, multimodal authentication systems have demonstrated up to 97% accuracy in controlled environments.
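The combination of an active challenge and multimodal scoring described above can be sketched roughly as follows. This is a minimal illustration, not a production design: the prompt names, the weights and the 0.8 threshold are all assumptions made for the example, and the per-modality scores are assumed to come from separate face, voice and behavioral models that are not shown.

```python
import secrets
from dataclasses import dataclass

# Hypothetical prompt set; a real system would define its own.
CHALLENGES = ("turn_left", "turn_right", "blink_twice", "smile", "look_up")

# Illustrative weights and threshold (assumptions, not tuned values).
WEIGHTS = {"face": 0.5, "voice": 0.3, "behavior": 0.2}
THRESHOLD = 0.8


def issue_challenge(length: int = 3) -> list[str]:
    """Pick an unpredictable prompt sequence so a pre-recorded clip cannot anticipate it."""
    return [secrets.choice(CHALLENGES) for _ in range(length)]


def challenge_passed(expected: list[str], observed: list[str]) -> bool:
    """The actions detected in the video must match the issued prompts in order."""
    return expected == observed


@dataclass(frozen=True)
class ModalityScores:
    """Match scores in [0, 1] from separate (not shown) face, voice and behavior models."""
    face: float
    voice: float
    behavior: float


def fused_score(scores: ModalityScores) -> float:
    """Weighted average of the three modality scores."""
    return (
        WEIGHTS["face"] * scores.face
        + WEIGHTS["voice"] * scores.voice
        + WEIGHTS["behavior"] * scores.behavior
    )


def authenticate(expected: list[str], observed: list[str], scores: ModalityScores) -> bool:
    """Require both a passed liveness challenge and a high enough fused score."""
    return challenge_passed(expected, observed) and fused_score(scores) >= THRESHOLD
```

Under these assumed weights, a strong face match alone is not enough: a spoof that fools the face model but scores poorly on voice and behavior falls below the threshold.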

2. Reinforced Biometric Protocols

For high-value transactions, additional checks, such as confirming on a trusted device or using digital signatures, should be required. Time delays for large transfers can help stop social engineering attacks. Watching how users type and move through the site after they log in can also help confirm their identity. Some insurers are adopting biometrics to reduce billing fraud, making it more difficult for individuals to share insurance cards or impersonate others.
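As a rough sketch, the step-up checks and time delays for large transfers might be expressed as a small policy function. The 10,000 threshold, the 24-hour hold and the field names below are hypothetical values chosen for illustration; real limits would depend on the institution's risk policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical policy values, chosen only for illustration.
HIGH_VALUE_THRESHOLD = 10_000
HOLD_PERIOD = timedelta(hours=24)


@dataclass(frozen=True)
class Transfer:
    amount: float
    face_match: bool                 # result of the facial recognition check
    trusted_device_confirmed: bool   # user approved on a previously enrolled device
    requested_at: datetime


def decide(transfer: Transfer, now: datetime) -> str:
    """Return 'deny', 'approve', 'step_up' or 'hold' for a transfer request."""
    if not transfer.face_match:
        return "deny"
    if transfer.amount < HIGH_VALUE_THRESHOLD:
        return "approve"
    # High-value path: a face match alone is never sufficient.
    if not transfer.trusted_device_confirmed:
        return "step_up"
    # Delay large transfers so a social engineering attempt can be caught.
    if now - transfer.requested_at < HOLD_PERIOD:
        return "hold"
    return "approve"


# Usage: a large transfer with a face match but no trusted-device confirmation.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
request = Transfer(50_000, True, False, now)
print(decide(request, now))  # step_up
```

The point of the ordering is that a successful face match only ever unlocks low-value actions by itself; anything above the threshold needs an independent factor and a waiting period.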

3. Mobile Application Defense

On mobile devices, safety measures should function within the application itself. Real-time, on-device detection can block virtual camera feeds and identify image tampering before any data is transmitted. AI-based detection systems can be regularly updated using federated learning, allowing them to recognize new manipulation techniques while maintaining user privacy.
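On-device detection itself depends on platform-specific signals, but one complementary idea can be sketched in a few lines: binding each captured frame to a server-issued session nonce with a keyed hash, so the server can reject frames that were altered or replayed in transit. This is an assumption-laden illustration rather than a description of any particular product. The function names are hypothetical, the device key is assumed to be provisioned securely (for example in hardware-backed storage), and the check does not help if synthetic media is injected before the frame is signed.

```python
import hashlib
import hmac
import secrets

NONCE_BYTES = 16  # fixed length, so nonce + frame concatenation is unambiguous


def new_session_nonce() -> bytes:
    """Server side: issue a fresh random nonce for each verification session."""
    return secrets.token_bytes(NONCE_BYTES)


def sign_frame(device_key: bytes, nonce: bytes, frame: bytes) -> bytes:
    """App side: tag the captured frame with HMAC-SHA256 over nonce + frame bytes."""
    return hmac.new(device_key, nonce + frame, hashlib.sha256).digest()


def verify_frame(device_key: bytes, nonce: bytes, frame: bytes, tag: bytes) -> bool:
    """Server side: recompute the tag and compare in constant time."""
    expected = sign_frame(device_key, nonce, frame)
    return hmac.compare_digest(expected, tag)
```

A frame swapped after signing, or a tag replayed from an earlier session with a different nonce, fails verification under this scheme.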

4. Policy and Regulatory Awareness

The European Union AI Act, which entered into force in August 2024, introduces transparency requirements for AI-generated content. It also sets a clear timeline for obligations on high-risk AI systems that could impact safety, rights or access to essential services.

In the U.S., the Financial Crimes Enforcement Network warned about deepfake fraud in late 2024 as suspicious activity reports involving fake media increased. Security leaders who follow these updates can keep their technical safeguards aligned with evolving compliance requirements and threat landscapes.

Outsmart AI Impersonators With Layered Security

Malicious actors will continue to leverage AI wherever they can. Security teams need to stay ahead of AI-driven impersonation by focusing on what makes real humans unique, combining liveness detection, reinforced biometrics and real-time mobile defenses so that facial recognition remains reliable even in the face of deepfake threats.

Devin Partida is a frequent contributor to Brilliance Security Magazine, an industrial tech writer, and the Editor-in-Chief of ReHack.com, a digital magazine for all things technology, big data, cryptocurrency, and more. To read more from Devin, please check out the site.

